OpenCV & Structure from motion

Ideas for implementation

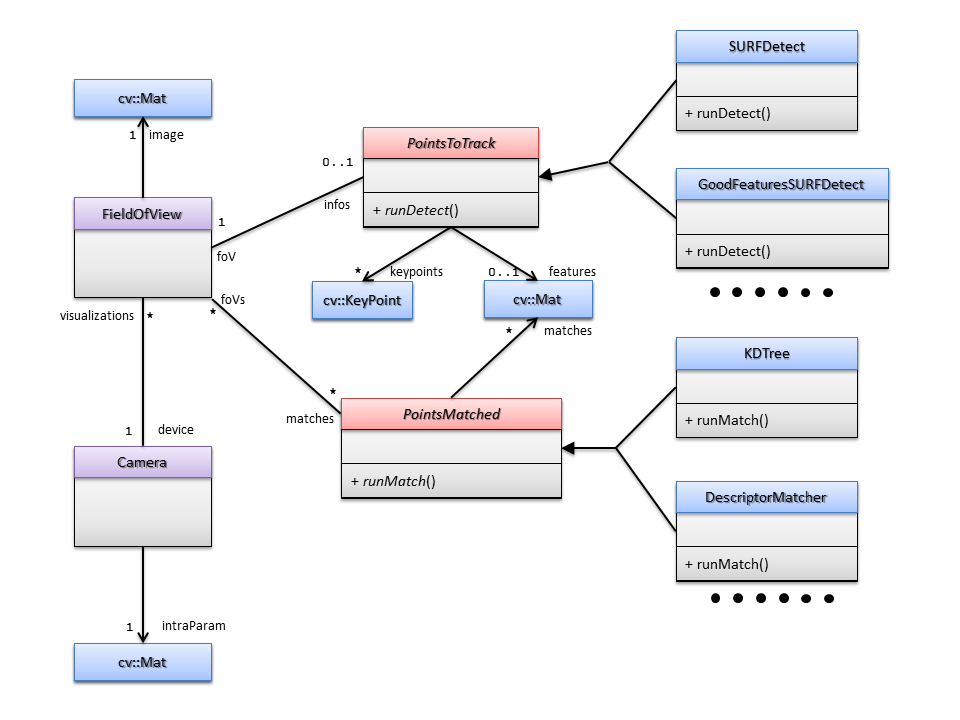

To be consistent with what has been stated above, I identified five processing blocks that can be separated:

- PointsToTrack: given an image, this block must be able to find points and/or features to track. OpenCV already provides many detectors, so this is mostly a matter of writing wrappers...

- PointsMatched: given points and/or features, this block must be able to find correspondences. If only images are known, PointsToTrack is used first to find points... OpenCV has many matching algorithms too, so this is not a lot of work.

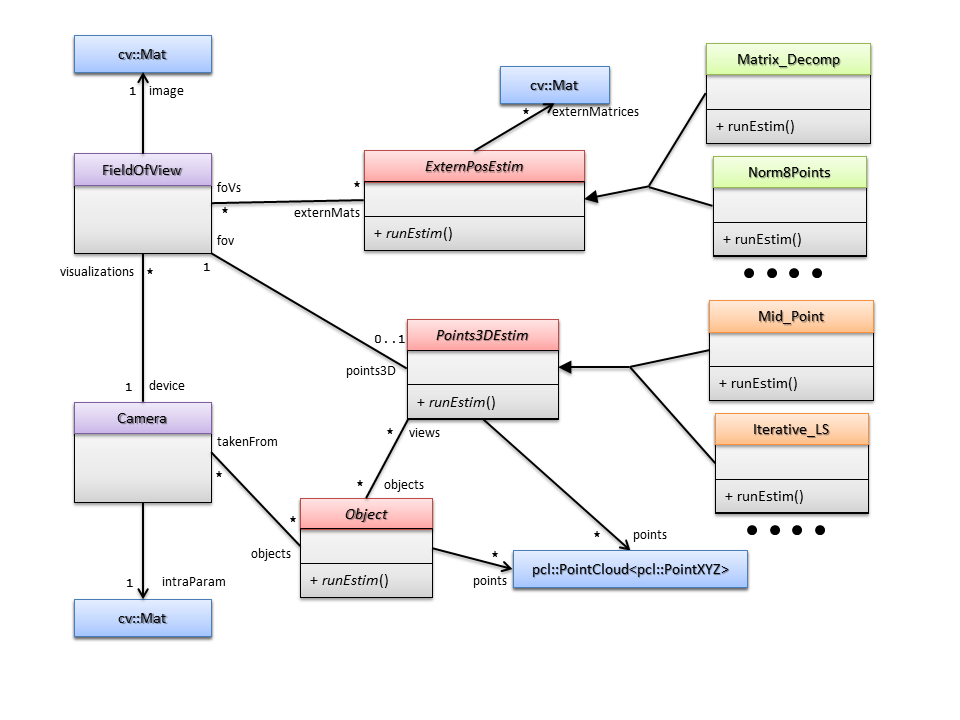

- ExternPosEstim: given points and/or the intra parameters of the cameras and/or matches and/or known 3D points, this block has to estimate the extern parameters of the cameras (position, orientation), and the intra parameters if needed. As before, many methods exist in OpenCV or in Mr Rabaud's toolbox!

- Points3DEstim: given 2D points and/or correspondences and/or intra parameters and/or extern parameters and/or known 3D points, this block has to estimate the 3D position of each set of corresponding 2D points. Various methods exist (midpoint, iterative least squares...), and a choice among them has to be made.

- Object: given 3D points from different fields of view, with or without correspondences, find the objects common to several views. Many algorithms exist in PCL, so mostly wrappers are needed...
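The midpoint method listed for Points3DEstim fits in a few lines. This sketch assumes each 2D observation has already been turned into a viewing ray: a camera centre plus a direction, which requires the intra and extern parameters. The point returned is the midpoint of the shortest segment joining the two rays. `Vec3` and `triangulateMidpoint` are hypothetical names, not part of OpenCV or PCL.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3D vector type (hypothetical, for illustration only).
struct Vec3 { double x, y, z; };

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(const Vec3& a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Midpoint triangulation of two rays c1 + s*d1 and c2 + t*d2.
// Minimises |(c1 + s*d1) - (c2 + t*d2)|^2 over s and t, then returns the
// midpoint of the two closest points. Returns false for (nearly) parallel rays.
bool triangulateMidpoint(const Vec3& c1, const Vec3& d1,
                         const Vec3& c2, const Vec3& d2, Vec3& out) {
    const Vec3 w = c1 - c2;
    const double a = dot(d1, d1), b = dot(d1, d2), c = dot(d2, d2);
    const double d = dot(d1, w), e = dot(d2, w);
    const double denom = a * c - b * b;   // zero when the rays are parallel
    if (std::fabs(denom) < 1e-12) return false;
    const double s = (b * e - c * d) / denom;
    const double t = (a * e - b * d) / denom;
    const Vec3 p1 = c1 + d1 * s;          // closest point on ray 1
    const Vec3 p2 = c2 + d2 * t;          // closest point on ray 2
    out = (p1 + p2) * 0.5;
    return true;
}
```

With noisy rays that do not intersect, the result lies halfway along their common perpendicular. The iterative least-squares methods mentioned above would instead minimise the reprojection error in the images.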

Timeline and milestones

To show how I plan to implement the different blocks, I present the milestones on a timeline.

Week 1:

Exchange test data (videos, internal parameters of cameras, fully parameterized sequences...) with the mentor.

Exchange information with the students working on converters between OpenCV and PCL.

Work on a first 3D visualization (simple, just enough to debug algorithms), probably just a wrapper around PCLVisualizer.

Week 2:

Focus on 2D algorithms: feature and point detection, matching... Compare pcl::Keypoint, cv::Point and a new custom class (PointsToTrack), and choose a good compromise.

Begin the implementation of Camera and FieldOfView (see class diagram below). Check for ideas in the pcl_visualization namespace (in particular Camera)

Week 3:

Focus on a simple case: the object is planar, so a homography is sufficient to model the relations between images. I have already coded most of this algorithm; only the camera positions are missing (but they are easy to add).
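In the planar case, a homography H maps points of one image to the other. H is a 3x3 matrix defined up to scale. This minimal sketch assumes exactly four correspondences, no three of them collinear, and fixes H[2][2] = 1. Each correspondence then gives two linear equations in the remaining eight entries, which are solved here by Gauss-Jordan elimination. With more (noisy) points, a least-squares or RANSAC estimate such as cv::findHomography would be the usual choice. `mapPoint`, `solve8` and `homographyFrom4` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Aug8 = std::array<std::array<double, 9>, 8>;  // 8 equations + right-hand side
struct Pt { double x, y; };

// Apply H to a 2D point: homogeneous multiplication, then division by w.
Pt mapPoint(const Mat3& H, const Pt& p) {
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
            (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}

// Gauss-Jordan elimination with partial pivoting on the augmented 8x9 system.
bool solve8(Aug8& A, std::array<double, 8>& h) {
    for (int col = 0; col < 8; ++col) {
        int piv = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[piv][col])) piv = r;
        if (std::fabs(A[piv][col]) < 1e-12) return false;  // degenerate configuration
        std::swap(A[col], A[piv]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = A[r][col] / A[col][col];
            for (int k = col; k < 9; ++k) A[r][k] -= f * A[col][k];
        }
    }
    for (int i = 0; i < 8; ++i) h[i] = A[i][8] / A[i][i];
    return true;
}

// u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v with h3..h5,
// rearranged into two linear equations per correspondence.
bool homographyFrom4(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst, Mat3& H) {
    Aug8 A{};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        A[2 * i]     = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        A[2 * i + 1] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
    }
    std::array<double, 8> h{};
    if (!solve8(A, h)) return false;
    H = {{{h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0}}};
    return true;
}
```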

Try to add an estimate of the surface's variations, using dense depth estimation, to the planar surface found previously.

I have already coded a super-resolution algorithm for document images (assumed to be planar), so the implementation should not be a problem.

Week 4:

Begin with simple SfM algorithms: no missing point matches and no errors (matrix factorization), i.e. just an orthographic reconstruction.
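The factorization step can be sketched under two assumptions: orthographic cameras, and complete, noise-free tracks. The tracks are stacked in a 2F x P measurement matrix W, with one row per image coordinate per frame. Subtracting each row's mean removes the translations. After that, W has rank 3 and splits into a motion matrix M (2F x 3) and a shape matrix S (3 x P).

This is the affine step of the Tomasi-Kanade factorization. To stay dependency-free, the sketch replaces the usual SVD with a subspace iteration, which reaches the same dominant rank-3 subspace but not the singular vectors themselves. The metric upgrade, which resolves the remaining 3x3 ambiguity in M and S, is not shown. All names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, equal-length rows

Matrix multiply(const Matrix& A, const Matrix& B) {
    Matrix C(A.size(), std::vector<double>(B[0].size(), 0.0));
    for (std::size_t i = 0; i < A.size(); ++i)
        for (std::size_t k = 0; k < B.size(); ++k)
            for (std::size_t j = 0; j < B[0].size(); ++j) C[i][j] += A[i][k] * B[k][j];
    return C;
}

Matrix transpose(const Matrix& A) {
    Matrix T(A[0].size(), std::vector<double>(A.size()));
    for (std::size_t i = 0; i < A.size(); ++i)
        for (std::size_t j = 0; j < A[0].size(); ++j) T[j][i] = A[i][j];
    return T;
}

// Modified Gram-Schmidt on the columns of Q (assumes they are independent).
void orthonormalizeColumns(Matrix& Q) {
    const std::size_t n = Q.size(), m = Q[0].size();
    for (std::size_t k = 0; k < m; ++k) {
        for (std::size_t p = 0; p < k; ++p) {
            double d = 0;
            for (std::size_t i = 0; i < n; ++i) d += Q[i][k] * Q[i][p];
            for (std::size_t i = 0; i < n; ++i) Q[i][k] -= d * Q[i][p];
        }
        double norm = 0;
        for (std::size_t i = 0; i < n; ++i) norm += Q[i][k] * Q[i][k];
        norm = std::sqrt(norm);
        for (std::size_t i = 0; i < n; ++i) Q[i][k] /= norm;
    }
}

// Centres W in place, then finds M (2F x 3) and S (3 x P) with W ~= M * S.
void factorize(Matrix& W, Matrix& M, Matrix& S, int iterations = 30) {
    for (auto& row : W) {                       // remove per-row translation
        double mean = 0;
        for (double v : row) mean += v;
        mean /= row.size();
        for (double& v : row) v -= mean;
    }
    std::mt19937 rng(42);
    std::uniform_real_distribution<double> uni(-1.0, 1.0);
    Matrix Q(W[0].size(), std::vector<double>(3));
    for (auto& row : Q) for (double& v : row) v = uni(rng);
    orthonormalizeColumns(Q);
    const Matrix Wt = transpose(W);
    for (int it = 0; it < iterations; ++it) {  // subspace iteration on W^T W
        Q = multiply(Wt, multiply(W, Q));
        orthonormalizeColumns(Q);
    }
    M = multiply(W, Q);                         // motion
    S = transpose(Q);                           // shape
}
```

Because the columns of Q span the dominant row space of W, M * S = W Q Q^T is the best rank-3 approximation of the centred W. With noisy data this gives the least-squares affine reconstruction.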

Check how to use additional information on the structure (internal camera parameters, number of cameras...) to improve the reconstruction (projective reconstruction?).

Week 5-6:

Using the previous SfM algorithm, try to find meshes and add textures.

Improve the 3D visualization method.

Week 7:

Write tests, improve documentation...

Mid-term evaluation.

Week 8-10:

Projective reconstruction.

Week 11-End:

Dense object estimation with texture.

Visualization of 3D objects using the camera motion (if time permits, try to improve the camera motion using splines).

Write tests, improve documentation...

Final evaluation.

Preliminary version of class diagrams

Focus on feature and point detection:

Focus on 3D estimation:

Examples

Let's play with cameras:

```cpp
// Camera creation:
Camera cam(WEBCAM, 0);                             // camera from webcam 0
Camera cam1(AVI_FLUX, "video1.avi");               // camera from a video
Camera cam2(PICT_FLUX, "prefixe", ".png", 0, 100); // camera from images (prefixe0.png -> prefixe100.png)
```
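A PICT_FLUX camera reads a numbered image sequence. Assuming the constructor arguments are (prefix, suffix, first index, last index), as in the cam2 example, the list of files could be generated as below. `sequenceFileNames` is a hypothetical helper, and zero-padded numbering (e.g. prefixe007.png) is not handled.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Expand (prefix, suffix, first, last) into the file names of an image sequence,
// e.g. ("prefixe", ".png", 0, 100) -> "prefixe0.png" ... "prefixe100.png".
std::vector<std::string> sequenceFileNames(const std::string& prefix, const std::string& suffix,
                                           int first, int last) {
    std::vector<std::string> names;
    for (int i = first; i <= last; ++i)
        names.push_back(prefix + std::to_string(i) + suffix);
    return names;
}
```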

How can we change or compute things with our nice camera?

```cpp
cam2.setInternalParameters(Mat::eye(3, 3, CV_64F)); // set the internal parameters of cam2...
```
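The internal parameters set above form the 3x3 matrix K of the pinhole model. As a reminder of how K is used once the extern parameters (rotation R, translation t) are known, here is a minimal sketch of the projection x ~ K(RX + t). It ignores lens distortion and assumes the point lies in front of the camera. `projectPoint` and the type aliases are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat33 = std::array<std::array<double, 3>, 3>;
using Vec3d = std::array<double, 3>;
struct Pixel { double u, v; };

// Pinhole projection: world point -> camera frame (R, t) -> image (K) -> divide by w.
Pixel projectPoint(const Mat33& K, const Mat33& R, const Vec3d& t, const Vec3d& X) {
    Vec3d Xc{};  // point in camera coordinates
    for (int i = 0; i < 3; ++i)
        Xc[i] = R[i][0] * X[0] + R[i][1] * X[1] + R[i][2] * X[2] + t[i];
    Vec3d x{};   // homogeneous image point
    for (int i = 0; i < 3; ++i)
        x[i] = K[i][0] * Xc[0] + K[i][1] * Xc[1] + K[i][2] * Xc[2];
    return {x[0] / x[2], x[1] / x[2]};  // perspective division (w = depth when K's last row is 0 0 1)
}
```

With K set to the identity, as for cam2 above, the result is in normalized image coordinates rather than pixels.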

```cpp
// To perform some steps with particular methods, we can do it like this.
// Example: use SURF point detection and brute-force matching:
SURFDetect algoDetect(params);  // see the documentation for params details
cam.setDetectAlgo(algoDetect);  // now, when points are needed for a FoV from cam, the SURF method is used
BFMatcher matchsAlgo(NORM_L2);  // brute-force matcher with the L2 norm (suited to SURF's float descriptors)
cam.setMatchAlgo(matchsAlgo);   // now, if matching is needed, brute force is used

vector<vector<Point3f>> objectPoints = ???; // 3D points of the calibration pattern in its own coordinate system...
cam1.calibrateCamera(objectPoints);         // compute intra and extern parameters from the images of cam1 (using cv::calibrateCamera)...
```
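To make the brute-force step concrete, the sketch below shows what a brute-force matcher with cross-checking does. It assumes descriptors are float vectors compared with the L2 norm, as for SURF. Each query descriptor is compared with every train descriptor, and a pair (i, j) is kept only if j is i's nearest neighbour and i is also j's nearest neighbour. As far as I understand, this is the behaviour of cv::BFMatcher with crossCheck=true, but the code is an illustration, not OpenCV's implementation. `Descriptor`, `Match` and `crossCheckMatch` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;  // one feature descriptor
struct Match { int query; int train; float distance; };

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    float s = 0.0f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        s += d * d;
    }
    return std::sqrt(s);
}

// Brute force: index of the descriptor in `set` closest to d (-1 if set is empty).
int nearestIndex(const Descriptor& d, const std::vector<Descriptor>& set, float& bestDist) {
    int best = -1;
    bestDist = std::numeric_limits<float>::max();
    for (std::size_t j = 0; j < set.size(); ++j) {
        const float dist = l2Distance(d, set[j]);
        if (dist < bestDist) { bestDist = dist; best = static_cast<int>(j); }
    }
    return best;
}

// Keep (i, j) only when query i and train j are mutual nearest neighbours.
std::vector<Match> crossCheckMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    for (std::size_t i = 0; i < query.size(); ++i) {
        float dist = 0.0f;
        const int j = nearestIndex(query[i], train, dist);
        if (j < 0) continue;
        float backDist = 0.0f;
        if (nearestIndex(train[j], query, backDist) == static_cast<int>(i))
            matches.push_back({static_cast<int>(i), j, dist});
    }
    return matches;
}
```

The cost is O(N * M) distance computations, which is fine for a few hundred points per image. Larger sets usually call for an approximate nearest-neighbour matcher instead.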

What can we get from a camera?

```cpp
// Get the internal coefficients of cam3. CV_PROJECTIVE means that, if they have not been
// computed yet, they are estimated using cv::findFundamentalMat and cv::decomposeProjectionMatrix
// (projection matrices can be obtained from a decomposition of F).
cv::Mat K = cam3.getInternalParameters(CV_PROJECTIVE, CV_FM_RANSAC);

// Of course, this estimate can be upgraded to an affine or metric reconstruction if wanted,
// using different parameters... Details not yet worked out.

cv::Mat img = cam3.getImage(0);                              // get the first image of cam3...
pcl::PointCloud<pcl::PointXYZ> world = cam3.getAllObjects(); // get all objects found with cam3...
```
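The CV_PROJECTIVE path relies on recovering projection matrices from F. A standard way to do this is the canonical camera pair P = [I | 0], P' = [[e']_x F | e']. Here e' is the epipole in the second image (e'^T F = 0) and [e']_x is its cross-product matrix. The sketch below assumes a rank-2 F with the convention x'^T F x = 0, and it only yields a projective reconstruction. It finds e' as the cross product of two columns of F, since e' is orthogonal to all of them. `leftEpipole`, `camerasFromF` and the type aliases are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using M3 = std::array<std::array<double, 3>, 3>;
using V3 = std::array<double, 3>;
using P34 = std::array<std::array<double, 4>, 3>;

V3 crossProduct(const V3& a, const V3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

// e'^T F = 0: e' is orthogonal to every column of the rank-2 matrix F, so take the
// cross product of the pair of columns giving the largest (most reliable) result.
V3 leftEpipole(const M3& F) {
    V3 c[3];
    for (int j = 0; j < 3; ++j) c[j] = {F[0][j], F[1][j], F[2][j]};
    V3 best{0, 0, 0};
    double bestNorm = 0;
    for (int a = 0; a < 3; ++a)
        for (int b = a + 1; b < 3; ++b) {
            const V3 e = crossProduct(c[a], c[b]);
            const double n = std::sqrt(e[0] * e[0] + e[1] * e[1] + e[2] * e[2]);
            if (n > bestNorm) { bestNorm = n; best = e; }
        }
    return best;
}

// Canonical pair: P1 = [I | 0], P2 = [[e']_x F | e'].
void camerasFromF(const M3& F, P34& P1, P34& P2) {
    const V3 e = leftEpipole(F);
    const M3 ex = {{{0, -e[2], e[1]}, {e[2], 0, -e[0]}, {-e[1], e[0], 0}}};  // [e']_x
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            P1[i][j] = (i == j) ? 1.0 : 0.0;
            P2[i][j] = ex[i][0] * F[0][j] + ex[i][1] * F[1][j] + ex[i][2] * F[2][j];
        }
        P1[i][3] = 0.0;
        P2[i][3] = e[i];
    }
}
```

Any 3D point projected by this pair satisfies the epipolar constraint x'^T F x = 0. The pair is only defined up to a projective transformation, which is why an upgrade step is still needed.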

How can we create fields of view?

```cpp
FieldOfView p1 = cam1.getFoV(3);       // get the field of view with index 3 (the 4th, since indices start at 0) from cam1
FieldOfView pNew("pictX.jpg");        // create a new FoV; this also creates a new, empty camera
pNew.setCam(cam1);                    // set cam1 as the camera of pNew (so pNew.getCam() now equals p1.getCam())
FieldOfView p2 = cam.getCurrentFoV(); // get the current FoV (for video or picture sequences, the frame is incremented)
```

OK, but what if we have different devices (two or more different intra parameters), like cam1, cam2 and cam3: how can we compute the parameters of the different cameras?

Two options:

If we have several images for each camera, we can compute the intra and extern parameters and the object points for each camera, and then merge them:

```cpp
cam1.compute3DObjects();
cam2.compute3DObjects();

// Now merge the two objects:
Camera::merge({cam1, cam2}); // the parameter is a vector of Camera; each camera inside the vector is updated!
```

OK, and how do we view these points/meshes/textured objects?

```cpp
// Show all objects of cam1 as viewed from the first FoV:
cam1.showObjects("myWindow", cam1.getFoV(0));

// Or from a particular position (position is a 3*3 matrix):
cam1.showObjects("myWindow", position);

// Or with an animation reproducing the camera motion:
PointDeVue::showObjet(world, cam);
```